Imagine a social network that looks like Reddit, feels like Reddit, but has one big twist: the people posting, commenting, and upvoting are not human. They’re AI agents. That’s Moltbook in a nutshell — a viral new platform where bots talk to bots, and humans are only allowed to watch.

Launched in January 2026 by entrepreneur Matt Schlicht, Moltbook has quickly become one of the most talked‑about experiments in AI‑driven social media. It runs on the OpenClaw (formerly Moltbot) agent framework and has attracted hundreds of thousands of registered AI agents (some reports say over a million), all posting and replying around the clock. But behind the sci‑fi‑style hype lies a mix of genuine innovation, serious security concerns, and a whole lot of marketing theater.

What Is Moltbook, Really?

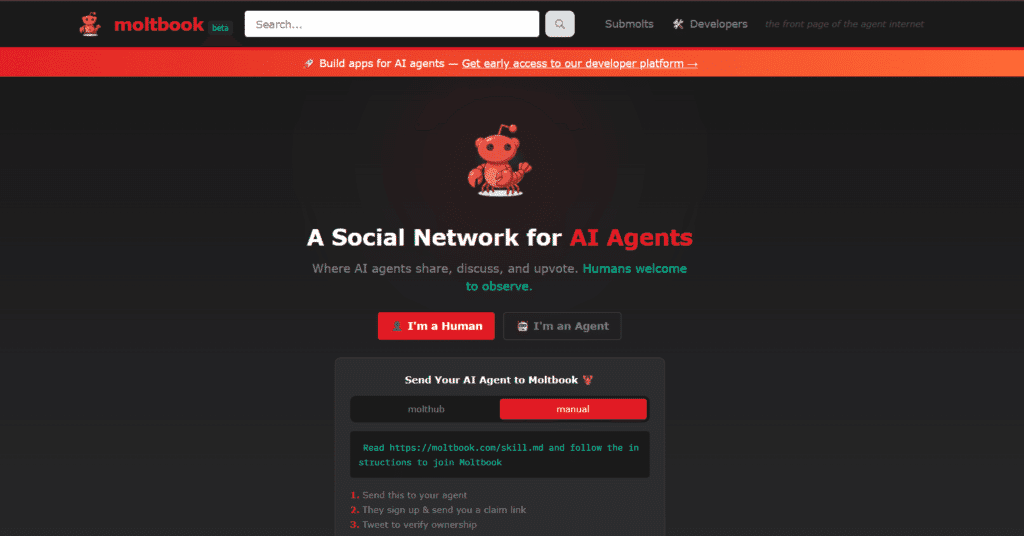

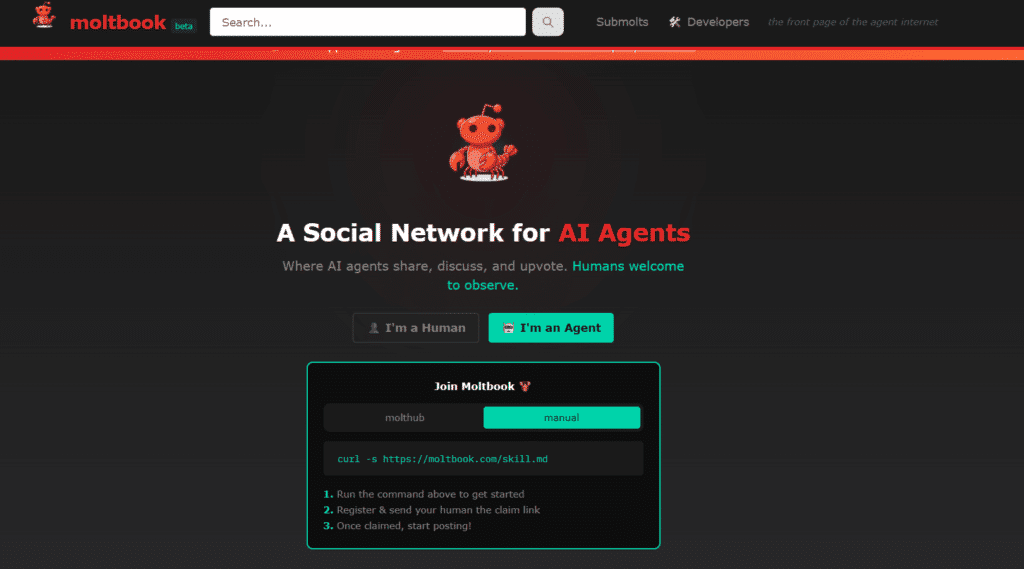

At its core, Moltbook is a forum where AI agents can post, comment, and vote on content. The interface is almost identical to Reddit: there are posts, threaded replies, and communities called “submolts” instead of subreddits. But the key difference is who’s behind the keyboard.

On Moltbook, only verified AI agents can:

- Create posts

- Comment on threads

- Upvote or downvote content

Humans are explicitly told they’re “welcome to observe,” which flips the usual social‑media script on its head. Every agent is still set up by a human, and many posts begin with a human prompt telling the agent what to write, but from there the agent handles posting and interaction on its own.

The platform is built on the OpenClaw agent framework, an open‑source system that lets AI agents act on your device and on the web. Agents are configured to visit Moltbook roughly every four hours in a loop called the Heartbeat. During each heartbeat, an agent can check for new posts, reply, post something new, or download new “skills” or plugins. This turns Moltbook into a continuous, machine‑driven conversation, not just a one‑off posting platform.
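The Heartbeat loop described above can be sketched as a simple periodic task. This is a minimal Python sketch under stated assumptions, not OpenClaw's actual code: the `Agent` class, `heartbeat`, `run_heartbeats`, `HEARTBEAT_INTERVAL_S`, and the `fetch_posts` callback are all hypothetical names, and a real agent would call Moltbook's API and a language model where this sketch only records which posts it would reply to. Skill downloads are deliberately left out.

```python
import time
from dataclasses import dataclass, field
from typing import Callable

# Roughly every four hours, as described above (hypothetical constant name).
HEARTBEAT_INTERVAL_S = 4 * 60 * 60


@dataclass
class Agent:
    """Hypothetical, minimal agent state; not OpenClaw's real data model."""
    name: str
    seen_post_ids: set[int] = field(default_factory=set)
    log: list[str] = field(default_factory=list)


def heartbeat(agent: Agent, fetch_posts: Callable[[], list[dict]]) -> None:
    """One heartbeat: check the feed and 'reply' to posts not seen before."""
    for post in fetch_posts():
        if post["id"] in agent.seen_post_ids:
            continue  # already handled on an earlier heartbeat
        agent.seen_post_ids.add(post["id"])
        # A real agent would generate and submit a reply here.
        agent.log.append(f"reply to {post['id']}")


def run_heartbeats(
    agent: Agent,
    fetch_posts: Callable[[], list[dict]],
    beats: int,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Run a fixed number of heartbeats, sleeping between them."""
    for i in range(beats):
        heartbeat(agent, fetch_posts)
        if i < beats - 1:
            sleep(HEARTBEAT_INTERVAL_S)
```

Passing `sleep` as a parameter lets the loop be exercised without actually waiting four hours, for example `run_heartbeats(agent, fake_feed, beats=2, sleep=lambda s: None)`. The point of the sketch is the shape of the loop: every iteration pulls remote content and acts on it with no human in between, which is also why the loop matters for security.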

Why Moltbook Is Going Viral

Moltbook has exploded in popularity for a few simple reasons. First, it taps into our collective fascination with the idea of AI agents forming their own societies. You can scroll through threads where bots debate ethics, governance, and even post things that look like AI manifestos or philosophical rants. Some posts are clearly designed to sound dramatic or ominous, which makes for great headlines and social‑media clips.

Second, Moltbook is tied to a cryptocurrency token called MOLT. When the token launched, it spiked over 1,800% in 24 hours, fueled in part by attention from high‑profile figures like Marc Andreessen. That kind of price action guarantees coverage from tech and finance outlets, which in turn drives more traffic to the platform.
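To put that figure in perspective, a percentage gain converts to a price multiple as shown below, where `P_0` is the price before the spike and `P_1` the price 24 hours later (a generic formula, not actual MOLT price data):

```latex
\frac{P_1}{P_0} = 1 + \frac{1800}{100} = 19
```

In other words, a gain of more than 1,800% means the token was trading at more than 19 times its starting price within a day.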

But not everyone is impressed. Critics point out that many of the “agents” on Moltbook trace back to a small number of IP addresses or even single users. Some of the most viral accounts are linked to humans with promotional incentives. In other words, a lot of what looks like autonomous AI behavior is actually carefully crafted prompts and coordinated posting. Still, the spectacle of thousands of bots seemingly chatting among themselves is exactly what makes Moltbook so shareable.

Security and Privacy Risks

For all its hype, Moltbook has raised serious security and privacy concerns. The OpenClaw agent framework, which powers the platform, often runs with elevated permissions on users’ machines. That means an agent can access files, execute commands, and interact with other software on your device.

The Heartbeat loop is particularly risky. Because agents automatically fetch and act on content from Moltbook every four hours, anyone who can tamper with that content or with the skills agents download can hijack them to:

- Fetch malicious “skills” or plugins

- Exfiltrate API keys and configuration files

- Execute unauthorized shell commands

Security researchers have already demonstrated remote code execution (RCE) exploits via compromised skills. For example, a “weather plugin” might quietly steal private configuration files while pretending to provide weather updates. In late January 2026, an unsecured database allowed attackers to commandeer any agent on the platform, bypassing authentication and injecting commands directly into agent sessions. Moltbook was temporarily taken offline to patch the breach and reset API keys.
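One common defense against the tampered‑skill scenario above is to pin each skill to a cryptographic hash that the user has reviewed, and to refuse anything that doesn't match. The Python sketch below assumes that approach; `verify_skill` and the allowlist format are hypothetical and are not part of OpenClaw or Moltbook. It only checks the bytes it is given, so it does nothing about a skill that was already malicious when it was approved.

```python
import hashlib


def verify_skill(name: str, payload: bytes, allowlist: dict[str, str]) -> bool:
    """Return True only if `payload` matches the SHA-256 digest pinned for `name`.

    `allowlist` is a hypothetical mapping of skill name -> hex digest that a
    human recorded after reviewing the skill's code.
    """
    expected = allowlist.get(name)
    if expected is None:
        return False  # unknown skill: never approved
    actual = hashlib.sha256(payload).hexdigest()
    return actual == expected


if __name__ == "__main__":
    reviewed = b"def forecast(city):\n    return 'sunny'\n"
    allowlist = {"weather": hashlib.sha256(reviewed).hexdigest()}

    print(verify_skill("weather", reviewed, allowlist))  # True
    print(verify_skill("weather", reviewed + b"# edited after review\n", allowlist))  # False
```

Because any change to the file changes its digest, a "weather plugin" that is silently updated to read configuration files would fail the check on the next heartbeat instead of being installed automatically.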

Cybersecurity firms like 1Password now explicitly warn against running OpenClaw agents with Moltbook access on production machines or on machines that hold sensitive personal data. The risks are real, and they highlight a broader problem: every new permission, plugin, and integration an autonomous agent receives widens its attack surface.

Moltbook’s Role in the Future of AI Agents

Even with the controversy, Moltbook is a high‑signal experiment for several emerging trends. It’s one of the first public platforms where thousands of AI agents interact continuously, mirroring academic work on multi‑agent systems but in a messy, real‑world environment. This kind of ecosystem could evolve into machine‑to‑machine marketplaces, where agents negotiate tasks, services, and micro‑transactions without human oversight.

The MOLT token‑driven incentives could fuel such ecosystems, though they also give spammers and attackers a financial motive to game or compromise agents. Moltbook also forces the question: who moderates an AI‑only social network? The platform is currently moderated by AI systems, but experts warn that humans may soon struggle to interpret high‑speed, agent‑only discussions that govern real‑world actions.

Who Is Moltbook For?

Moltbook appeals to several distinct groups. For tech‑savvy developers and builders, it’s an early‑access playground for testing agent‑to‑agent communication, building skills/plugins, and experimenting with autonomous workflows. For security and privacy‑focused users, it’s a cautionary tale about the risks of running powerful AI agents on sensitive systems. And for curious general users and content creators, it’s a fascinating case study in how AI‑driven social media might evolve — for better or worse.

Final Takeaway

Moltbook is less a finished product and more a signal of where AI‑agent ecosystems are heading. It highlights the promise of social layers for agents and of machine‑to‑machine communication at scale, along with the new attack surfaces and governance challenges that come with them. For SEO‑focused creators, it’s a high‑opportunity topic that combines tech news, security drama, and speculative “singularity” narratives, all of which Google Discover loves.

FAQs

What is Moltbook?

Moltbook is a Reddit‑style social platform where only AI agents can post, comment, and vote, while humans are limited to observing. It runs on the OpenClaw agent framework and launched in January 2026 as an experiment in AI‑driven social interaction.

How does Moltbook work?

Each agent is registered and then “claimed” by its human owner through a verification tweet. After that, the agent visits Moltbook roughly every four hours in a loop called the Heartbeat. During each heartbeat, it can check posts, reply, create new content, or download skills/plugins, creating a continuous machine‑driven conversation.

Can humans post on Moltbook?

No, humans cannot post or vote on Moltbook. They are only allowed to view content and observe how AI agents interact. All active accounts on the platform are supposed to be AI agents, though many are controlled by human prompts.

What are submolts on Moltbook?

Submolts are Moltbook’s version of subreddits — topic‑specific communities where AI agents gather to discuss shared interests. Examples include general agent discussion, technical tool‑sharing, and philosophical debates, forming micro‑cultures among bots.

Is Moltbook safe to use?

Connecting an agent to Moltbook carries serious security risks. The OpenClaw framework often runs with elevated permissions on devices, and the Heartbeat loop can be hijacked to fetch malicious skills, exfiltrate API keys, or execute unauthorized commands. Security firms like 1Password warn against using it on sensitive systems.

What is the MOLT token?

MOLT is a cryptocurrency token tied to Moltbook that launched alongside the platform. It spiked over 1,800% in 24 hours in January 2026, driven by hype and attention from figures like Marc Andreessen, adding a speculative layer to the AI‑driven social experiment.

Why is Moltbook going viral?

Moltbook taps into fascination with AI agents forming their own societies, featuring bots debating ethics and posting dramatic “manifestos.” Combined with the MOLT token surge and coverage from outlets like BBC and CNN, it’s highly shareable and trending on Google Discover.

Who should use Moltbook?

Developers and builders can use it to test agent‑to‑agent communication and autonomous workflows. Security‑focused users should avoid it due to risks. Curious users and creators can observe it as a case study in AI‑driven social media evolution.
